Archive

Tricks to debug Captivate SCORM 1.2 content

If you have to make Captivate-generated SCORM 1.2 content work in your (web) platform and you want *more* information about what’s going on, this guide might help.

One of the trickiest things about Captivate and SCORM is that Captivate doesn’t handle the credit/no-credit parameter (cmi.core.credit) very well. This parameter, in combination with cmi.core.lesson_mode, should let you retake exercises that you have already completed and passed.

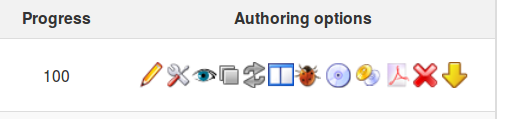

In Chamilo, if you want to debug the SCORM interactions on the Chamilo side, use Firefox, log in as admin, go to the learning paths list and click the ladybug icon in the action icons next to the learning path you want to debug. This only affects your own session, so no worries about doing that in production.

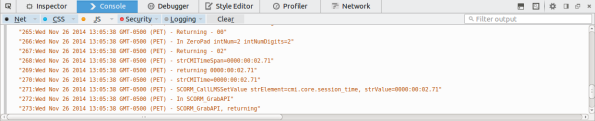

Then enter the learning path itself (simply click its name). Once you’re seeing the content, launch the Firefox debugger with SHIFT+F2 and go to the “Console” tab. Click on any item in the table of contents, and you should see the SCORM and LMS interactions pouring in. Something like the screenshot below.


This already helps a lot to understand the interactions going on, and when Chamilo saves the information in its database, but it still doesn’t show what’s happening on the Captivate side of things.

Captivate itself does have debug features, but they’re not designed to work with just any tool. Luckily, we can tap into them with a moderate level of complexity.

First, you need to know where the Captivate content is on disk in your LMS. In Chamilo, this is typically in {root-folder}/courses/{course-code}/scorm/{scorm-name}/{scorm-name}. For Captivate content, Chamilo usually generates its own folder, then Captivate adds its own, which is why the folder name appears twice.

You’ll have to get into that folder and, for each SCO (item in the table of contents in the screenshot above), locate the scormdriver.js file.
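Since there is one scormdriver.js per SCO, finding them all by hand gets tedious. Here is a minimal sketch that lists every scormdriver.js under a package folder and keeps a pristine copy next to each one before you start editing. The function name and the ".orig" suffix are arbitrary choices made for this example, and the path in the final comment is only a placeholder following the layout described above.

```shell
# Hypothetical helper: list and back up every scormdriver.js found under a
# SCORM package folder. The ".orig" suffix is an arbitrary choice for this sketch.
backup_scormdrivers() {
    scorm_dir="$1"
    find "$scorm_dir" -type f -name 'scormdriver.js' | while IFS= read -r driver; do
        # Never overwrite an existing backup, so the pristine copy is kept
        # even if the helper is run again after the file has been edited.
        if [ ! -e "$driver.orig" ]; then
            cp -p "$driver" "$driver.orig"
        fi
        echo "$driver"
    done
}

# Example call (placeholder path):
# backup_scormdrivers /path/to/chamilo/courses/COURSECODE/scorm/my-scorm
```

Restoring the original behaviour later is then just a matter of copying each .orig file back over its scormdriver.js.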

Around line 1032 of scormdriver.js, you’ll find something like this:

function WriteToDebug(strInfo){if(blnDebug){var dtm=new Date();var strLine;strLine=aryDebug.length+":"+dtm.toString()+" - "+strInfo;aryDebug[aryDebug.length]=strLine;if(winDebug&&!winDebug.closed){winDebug.document.write(strLine+"<br>\n");}}
return;}

We’ll hack into that and just replace it straight away (keep a backup copy if you’re afraid) with the following line:

function WriteToDebug(strInfo){var dtm=new Date();var strLine;strLine=aryDebug.length+":"+dtm.toString()+" - "+strInfo;aryDebug[aryDebug.length]=strLine; console.log(strLine); return;}

This will simply:

- force the debug to be active (we don’t check blnDebug anymore, we assume it’s on!)

- redirect the debug messages from this weird winDebug.document to the officially-supported-in-all-reasonable-browsers “console.log”, which prints the log in the browser console, as illustrated above

Now, to get this to work, you need to clear your browser’s cache. My favorite way of doing this in Chamilo is to press CTRL+F5, then go *back* to the learning paths list, enter *another* learning path, then enter the hacked learning path again. Captivate debug information should start showing in your browser’s console.

Now you can analyze all the information flow.

That’s all, folks!

How to ignore permission (chmod) changes in Git

In case you ever wonder how to keep Git from recording permission (or “mode”) changes on files, here is a short explanation that should help.

The short answer, as given in an interesting Stack Overflow post, is to go to your local Git repository folder on the command line and run:

git config core.fileMode false

If you want to do this only once (for a single command) rather than configure it for all future commits, run:

git -c core.fileMode=false diff

If instead you want to change it once and for *all* your repositories, run:

git config --global core.filemode false

or

git config --add --global core.filemode false

Then, if you want to revert permission changes that were already made (first restoring the executable bit where it was lost, then removing it where it was added):

git diff --summary | grep --color 'mode change 100755 => 100644' \
| cut -d' ' -f7- | xargs -d'\n' chmod +x

git diff --summary | grep --color 'mode change 100644 => 100755' \
| cut -d' ' -f7- | xargs -d'\n' chmod -x

This article is merely a summarized translation of the Stack Overflow post, so if you want more details, follow the link above.
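To see what the setting changes in practice, here is a small sketch that can be run safely, because it only touches a throw-away repository created in a temporary directory. The function name, the file name script.sh and the demo identity passed to git commit are all made up for this example.

```shell
# Demonstration in a throw-away repository (created under a temporary directory).
demo_filemode() {
    repo=$(mktemp -d) || return 1
    (
        cd "$repo" || exit 1
        git init -q .
        # Start from the default behaviour: mode changes are tracked.
        git config core.fileMode true
        echo 'echo hello' > script.sh
        git add script.sh
        git -c user.name=demo -c user.email=demo@example.com commit -q -m 'Add script'
        # Change only the permissions, not the content.
        chmod +x script.sh
        echo "fileMode=true:  $(git diff --summary)"
        # Now tell Git to ignore the executable bit.
        git config core.fileMode false
        echo "fileMode=false: $(git diff --summary)"
    )
    rm -rf "$repo"
}

demo_filemode
```

With core.fileMode set to true, the first line should report a mode change from 100644 to 100755 for script.sh. Once it is set to false, the same command should report nothing.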

MySQL and MariaDB innodb_file_per_table

For those of you pushing your MySQL instances to higher levels, you’ll have realized at some point that all InnoDB tables store their data and indexes in a single shared file (ibdata1, usually).

This has several very bad outcomes:

- the ibdata1 file becomes huge after some time (we’ve seen it pass the 8GB bar)

- starting the MySQL server takes longer

- deleting an InnoDB table *doesn’t* reduce the space used by ibdata1

- to shrink it, you have to dump your data, shut down your server, remove ibdata1 completely, then restart the server so that it reinitializes ibdata1 at its initial size, and reload the data (but this is, of course, difficult or dangerous to do in a high-availability context)

I just found out that there has been a setting called “innodb_file_per_table” since at least MySQL 5.1:

http://dev.mysql.com/doc/refman/5.5/en/innodb-parameters.html#sysvar_innodb_file_per_table

The documentation explains the advantages and disadvantages of this method. As you might have guessed, the main disadvantage of using separate files is that you have more files, so I/O operations take a little more time when you scan them all, and of course there is the question of backward compatibility with previous versions of MySQL:

http://dev.mysql.com/doc/refman/5.1/en/innodb-multiple-tablespaces.html

This seems to be available in MariaDB as well.
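As a rough illustration of how the setting could be switched on, the sketch below writes a small option file containing it. The function name and the file name are made up, and the default directory is an assumption: Debian and Ubuntu packages usually read /etc/mysql/conf.d/*.cnf, but other installations may expect the line directly in the [mysqld] section of my.cnf.

```shell
# Hypothetical helper: write a drop-in option file enabling innodb_file_per_table.
# The default target directory is an assumption (Debian/Ubuntu layout).
write_file_per_table_conf() {
    conf_dir="${1:-/etc/mysql/conf.d}"
    printf '[mysqld]\ninnodb_file_per_table = 1\n' > "$conf_dir/innodb_file_per_table.cnf"
}

# After restarting the server, the active value can be checked with:
#   mysql -e "SHOW VARIABLES LIKE 'innodb_file_per_table'"
# Tables created before the change stay in ibdata1 until they are rebuilt, e.g.:
#   mysql -e "ALTER TABLE mydb.mytable ENGINE=InnoDB"
# (mydb.mytable is a placeholder name)
```

Note that enabling the setting does not shrink an existing ibdata1. It only keeps new or rebuilt tables out of it.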

Using php5-memcached to store sessions on distributed servers

This article is an extension of the previous article about storing sessions in Memcached with PHP.

Using Memcached for session storage is generally a matter of speed (storing sessions in memory is faster than on disk) and of scalability (using a Memcached server allows you to have several web servers serving the same PHP application in a seamless way).

In the previous article, we mentioned (3 years ago) that the php5-memcached extension did not seem to manage the storage of sessions quite well, or at least that the configuration of such a setup was not well documented. This time, we’ve been able to do it with php5-memcached. This time also, we’re talking about distributing Memcached over several servers, typically in a cloud environment with several web servers and no dedicated Memcached server, or where you need some kind of fail-over solution for the Memcached server. We’re setting this up on 3 Ubuntu 14.04 64-bit servers that act as web servers behind an Nginx load balancer. If you use another distribution or operating system, you might need to adapt the commands we’re showing here.

Installing Memcached + PHP

So the first few commands you need to launch install the required software on the 3 web servers (you can either do that on one of them and then replicate the image if you are using cloud instances, or install simultaneously on your 3 web servers using ClusterSSH, for example, with the cssh server1 server2 server3 command):

sudo apt-get install memcached php5-memcached
sudo service apache2 restart

The second command is to make sure Apache understands the php5-memcached extension is there.

In order to enable the connection to Memcached from the different load-balanced servers, we need to change the Memcached configuration to listen on the external IP address. Check the IP address with /sbin/ifconfig. The Memcached configuration file is located in /etc/memcached.conf. Locate the “-l” option and change “127.0.0.1” to your external IP, then save and close the file. Note that this might introduce a security flaw, since you are possibly opening your Memcached server to the outside world. You can prevent that using a firewall (iptables is a big classic, available on Ubuntu).

Next, restart the Memcached daemon:

sudo service memcached restart

Now you have a Memcached server running on each of your web servers, accessible from the other web servers. To test this, connect to any of your web servers and try a telnet connection on the default Memcached port: 11211, like so:

user@server1$ telnet ip-server2 11211

To get out of there, just type “quit” and Enter.

OK, so now that we have 3 Memcached servers, we only need to finish by configuring PHP to use them to store sessions.

Configuring PHP to use Memcached as session storage

This is done by editing your Apache VirtualHost files (on each web server) and adding (before the closing </VirtualHost> tag) the following PHP settings:

php_admin_value session.save_handler memcached
php_admin_value session.save_path "ip-server1:11211,ip-server2:11211,ip-server3:11211"

Now reload your web server:

sudo service apache2 reload

You should now be able to connect to your web application using the distributed Memcached server as a session storage (you usually don’t need to change anything in your application itself, but some might exceptionally define their own session storage policy).

The dangers of using a distributed Memcached server

Apart from the possible open access to your Memcached server previously mentioned, which is particularly security-related, you have to take another danger, mostly high-availability related, into account.

When using a distributed Memcached server configuration, it is important to understand that it works as a sharded configuration. That is, it doesn’t store the same sessions on all the available Memcached servers; it stores each single session on one single server. How it decides where to store a session is outside the scope of this article, but it means that, if you have 300 users with active sessions on your system at any one time and one of your web servers goes down, you still have 2 web servers and 2 Memcached servers, but around 100 users will lose their session (the ones stored on the web server that went down).

Worse: the PHP configuration will not be aware of this, and will still try to send sessions to the server that held these 100 sessions, making it impossible for those users to log in again until the corresponding Memcached server is back up (unless you change the server list in your PHP configuration).

This is why you have to consider 2 things, and why this article is just one step in the right direction:

- you should configure the Memcached servers from inside your application for session management (as such, you should have a save_handler defined inside it and check for the availability of each server *before* you store the session in it)

- if your sessions are critical, you should always have some kind of data persistence mechanism, whereby (for example), you store the session in the database once every ten times it is modified
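The availability check mentioned in the first point belongs inside the application, but while setting things up it can help to run a rough equivalent from the command line. The sketch below takes the same comma-separated list used in session.save_path and reports which servers accept TCP connections. Both function names are made up for this example, and it assumes a netcat (nc) that supports -z (connect without sending data) and -w (timeout in seconds). A missing nc is reported the same way as an unreachable server.

```shell
# Print each "host:port" entry of a comma-separated list on its own line.
split_servers() {
    printf '%s\n' "$1" | tr ',' '\n'
}

# Report whether each Memcached server in the list accepts TCP connections.
# Assumes nc supports -z and -w; this only tests reachability, not Memcached itself.
check_memcached_servers() {
    split_servers "$1" | while IFS= read -r entry; do
        host=${entry%:*}
        port=${entry##*:}
        if nc -z -w 2 "$host" "$port" </dev/null >/dev/null 2>&1; then
            echo "$entry up"
        else
            echo "$entry DOWN"
        fi
    done
}

# Example, using the placeholder names from the VirtualHost settings above:
# check_memcached_servers "ip-server1:11211,ip-server2:11211,ip-server3:11211"
```

This is only an operations-side sanity check. It does not replace handling an unavailable server inside the application.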

We hope this was of some use to you in understanding how to use Memcached for session storage in PHP. Please don’t hesitate to leave questions or comments below.

How to not write a condition for code readability

Sometimes you find a pearl in some code and it might take you a while to understand.

In the sessions management code of Chamilo, I found this the other day, then decided to leave it for later because I was in a hurry. Then today, I found it again, and again… it took me about 10 minutes to make sure of how the code was wrong and how to make it right.

if ($session->has('starttime') && $session->is_valid()) {
    $session->destroy();
} else {
    $session->write('starttime', time());
}

To give you the context, we want to know whether we should destroy the session because its time has expired, or whether to extend the period of time for which it is valid (because it is still active now, and we just refreshed a page, which led us to this piece of code).

OK, so as you can see, we check if the session has a value called “starttime”. If it hasn’t we pass to the “else” statement and define one, starting from now.

If, however, the session has a start time, we check whether it… “is valid” (that’s what the function’s called, right?) and, if it is… we destroy the session.

Uh… what?

Yeah… if it is valid, we destroy it (DIE SESSION, DIE!)

OK, so something’s wrong here. Let’s check that “is_valid()” method (there was documentation, but it was wrong as well, so let’s avoid more confusion)

public function is_valid()
{
    return !$this->is_stalled();
}

function is_stalled()
{
    return $this->end_time() >= time();
}

OK, so as you can see, is_valid() is the boolean opposite of is_stalled(). “Is stalled”, in my understanding of English (and I checked a dictionary, just to make sure) means “blocked” (in some way or another). Now that doesn’t really apply to something that is either “valid” or “expired”. And this is part of the problem.

The is_stalled() method, here, checks if the end_time for the session is greater than the current time. Obviously, we’ll agree (between you and me) that if the expiration time is greater (further in the future) than the current time, this means that we still have time before it expires, right?

Which means that, if the condition in is_stalled() is matched, we are actually *not* stalled at all. We are good to go.

So I believe the issue here was the developer either confusing the word “stalled” with something else or, as it doesn’t really apply to “expired” or “not expired”, simply picking the wrong word to describe the state.

In any case, now that we’ve reviewed these few lines of code, I’m sure you’ll agree it would be much clearer this way:

/* caller code */
if ($session->has('starttime') && $session->is_expired()) {
    $session->destroy();
} else {
    $session->write('starttime', time());
}

/* called method */
public function is_expired()
{
    return $this->end_time() < time();
}

Conclusion: always choose your function names carefully. Others *need* to be able to understand your code in order to extend it.
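To double-check that the renaming does not change the behaviour, the comparisons can be replayed outside PHP. The sketch below is not the Chamilo code: it mirrors the three methods as small shell functions taking the end time and the current time as plain numbers (arbitrary values chosen for the example), and prints what each one answers.

```shell
# Mirror of the PHP logic, with end_time and now passed as epoch-like numbers.
is_stalled() { [ "$1" -ge "$2" ]; }        # original: end_time >= now
is_valid()   { ! is_stalled "$1" "$2"; }   # original: !is_stalled()
is_expired() { [ "$1" -lt "$2" ]; }        # proposed: end_time < now

now=1000
for end_time in 900 1000 1100; do
    if is_valid "$end_time" "$now"; then v=true; else v=false; fi
    if is_expired "$end_time" "$now"; then e=true; else e=false; fi
    echo "end_time=$end_time is_valid=$v is_expired=$e"
done
```

Both functions give the same answer in all three cases (before, at and after the current time), which confirms that the original is_valid() was really an expiry check under a misleading name.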

Creating multiple git forks using upstream branches

Working with Git is… complex. It’s not that it’s from another world, and the complexity is probably worth it considering the crazy things it allows you to do, but sometimes it’s just mind-bogglingly complex to understand how to do things right.

Recently, we’ve had to manage a series of projects with changes that cannot be applied directly to our original Git repository on GitHub, so we decided, after giving it some thought, to make several forks of the project (instead of branches), to manage permissions and the real intentions behind each of the forks more clearly.

This would allow us a few important things:

- define permissions precisely, per repository

- use the repository to pull changes directly on our servers

- have some level of local customization on each server, using local commits (and subsequent merges)

- keep easy track of the changes that have been made on requests from some of our “customers” (they’re not necessarily commercial customers – sometimes they’re just users who decided to go crazy and show us what they can do, and we want to show that in public)

To do that, we need to fork our project (chamilo/chamilo-lms) several times over.

So the first problem is: you cannot fork the same project twice with the same user.

Adrian Short (@adrianshort) kind of solved that issue in his blog article, saying that you can just do the fork manually, creating an empty repo on Github and filling it with a copy of the main repo, then adding the origin remote manually.

Even though that works, the Github page (starting from the second fork with the same user) will *not* indicate it has been forked from chamilo/chamilo-lms: you’ll have to issue the

git remote -v

command to see that the upstream is correct.

The second problem is that the article doesn’t dive into the details of branch-level forks, so this article intends to fill in that particular missing detail, and in that respect, the GitHub help is definitely useful. Check the “Syncing a fork” help page if you want the crunchy details.

To make it short, there are 3 issues here:

- fetching the branches from the original repo (upstream)

- getting the desired branch to be checked out into your local repo

- defining that, from now on, you want to work on your repo as a fork of that specific branch in upstream (to be able to sync with it later on)

The complete procedure then, considering my personal account (ywarnier) and the original chamilo/chamilo-lms project on Github, with the intention to work on branch 1.9.x, would look like this:

git clone git@github.com:chamilo/chamilo-lms

git remote -v

git remote rename origin upstream

(here you have to create the “chamilo-lms-fork1” repo by hand in your GitHub account)

git remote add origin git@github.com:ywarnier/chamilo-lms-fork1.git

git remote -v

git push -u origin master

git branch -va

git fetch upstream

git branch -va

git checkout --track remotes/upstream/1.9.x

This should get you up and kicking with your multiple forks in a relatively short time, hopefully!
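Once a fork is set up this way, syncing it later with the upstream branch could look like the sketch below, which follows the usual fetch, merge and push sequence. The function name is made up for this example, and it assumes the remotes are named upstream and origin as in the procedure above, and that it is run from inside the fork’s working copy.

```shell
# Sketch: bring the local copy of a tracked branch up to date with upstream,
# then publish the result to the fork. Stops at the first step that fails.
sync_fork_branch() {
    branch="$1"
    git fetch upstream &&
    git checkout "$branch" &&
    git merge "upstream/$branch" &&
    git push origin "$branch"
}

# Example: sync_fork_branch 1.9.x
```

If the fork carries its own commits, the merge step may stop on conflicts, which then have to be resolved by hand before pushing.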

On PHP and cache slams and solutions

While reading about Doctrine’s cache mechanism (which applies to other stuff than database queries, by the way), my eye was caught by a little message at the end (last section) about cache slams.

I have used cache mechanisms extensively over the last few years, but (maybe luckily) never happened to witness a “cache slam”.

There’s a link to a blog (by an unnamed author) that explains that.

To make it short, you can have race conditions in APC (and probably in other caching mechanisms in PHP) when you assign a specific time for expiry of cache data, and a user gets to that expiry time at the same time (or very very very closely) as other users. This provokes a chain reaction (a little bit like an atomic bomb, but not with the same effect – unless some crazy military scientist binds a high-traffic website to the initiation process of an atomic bomb) which makes your website eat all memory and freeze (or something like that).

In reply to me mentioning it on Twitter, @PierreJoye (from the PHP development team) kindly pointed me to APCu, which is a user-land caching mechanism (so to speak, APC without the opcode cache, and simplified).

Apparently, this one doesn’t have the cache slam issue (although I haven’t checked it myself, I have faith in Pierre here) and it’s already in PECL (still in beta though), so if you want to try it out on Debian/Ubuntu, you will probably be able to sort it out with a simple:

sudo apt-get install php5-dev php-pear make

sudo pecl install APCu

(and then configure your PHP to include it).

Don’t forget that it is a PECL library, meaning the extension has to be compiled on your machine before you can enable it (hence php5-dev and make), but PECL should handle that just fine (in our case it’s a bit more complicated if we want to avoid asking our users – of Chamilo, that is – for more dependencies).

Anyway, just so you know, there are people like that who like to make the world a better place for us, PHP developers, so that we can make the world a better place developing professional-grade, super-efficient free software in PHP! Don’t miss out on contributing to that!

Debian/Ubuntu: replicating the list of packages on another machine

If you have ever bought a new machine and wanted to install the same list of programs as on your previous one, without having any special technique to do it, you will understand why I find this Ubuntu technique for automating the process so interesting…

First, on our “old” machine, we use dpkg to generate a list of all installed packages in a file called paquetes.txt:

dpkg --get-selections | grep -v deinstall > paquetes.txt

Then, on the new machine, we update the package sources list and use dpkg and apt-get to install from our file (which we can copy with an scp command or simply send by e-mail):

apt-get update
dpkg --set-selections < paquetes.txt
apt-get -u dselect-upgrade

And that’s it! Just let it download.

If you also want to recover all your personal files from the other machine, you can copy your home folder as well with the following command, run from the new machine:

cd; rsync -avz usuario@maquina-anterior:/home/usuario/ .

For this to work without problems, it is better to keep the same user name you had on the previous machine. Otherwise it could cause permission problems.

It is recommended to recover the user folder first and install the packages *afterwards*. That way, the installation will take into account any important settings when these applications first start.
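Before launching the installation, it can be reassuring to look at what is actually going to be installed. The sketch below is an optional extra, not part of the procedure above: it reads the output of dpkg --get-selections and keeps only the names of packages whose state is exactly “install”. The function name and the file names names.txt and new.txt are made up for this example.

```shell
# Read "dpkg --get-selections" output on stdin and print only the names of
# packages whose state (second column) is exactly "install".
selected_packages() {
    awk '$2 == "install" { print $1 }'
}

# On the old machine:
#   dpkg --get-selections | selected_packages > names.txt
# On the new machine, comm can then show what is still missing
# (both inputs must be sorted):
#   dpkg --get-selections | selected_packages | sort > new.txt
#   sort names.txt | comm -23 - new.txt
```

Unlike grep -v deinstall, the awk filter looks only at the state column, so a package whose name happens to contain “deinstall” is not dropped by accident.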

Nginx + CDN + GoogleBot or how to avoid many useless Googlebot hits

If you’re like me and you’ve developed a CDN distribution for your website’s content (while waiting for SPDY to be widely adopted and available in mainstream distributions), you might have noted that the Googlebot is frequently scanning your CDNs, and this might have made your website a bit overloaded.

After all, CDNs have several goals, but in my case the only one is to elegantly distribute content across subdomains so that your browser loads the page resources faster (otherwise it gets blocked by the limit on simultaneous downloads per host recommended by HTTP, or whatever higher limit your browser sets).

Hell, in my case, this is the number of page scans per day originating from the Googlebot on only one of my CDN-enabled sites (I think there are like 5 different subdomains). And these are only the IPs that requested the site the most:

3398: 66.249.73.186

1380: 66.249.73.27

1328: 66.249.73.15

1279: 66.249.73.214

1277: 66.249.73.179

1109: 66.249.73.181

1109: 66.249.73.48

1015: 66.249.73.38

822: 66.249.73.112

738: 66.249.73.182

As you can see, it sums up to about 13,000 requests in just 24h. On the main site (the www. prefixed one), I still get 10,000 requests per day from the Googlebot.
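Counts like the ones above can be extracted from the access log with standard tools. The sketch below assumes the usual “combined” log format, where the client IP is the first field and the user agent appears somewhere on the line. The function name is made up, and the log path in the example comment is only the usual Debian/Ubuntu default.

```shell
# Count requests per client IP for log lines mentioning Googlebot, and print
# the ten busiest IPs as "count: ip", highest first.
googlebot_hits_per_ip() {
    grep 'Googlebot' "$1" | awk '{ print $1 }' | sort | uniq -c | sort -rn |
        awk '{ print $1 ": " $2 }' | head -n 10
}

# Example (path is an assumption, adapt it to your virtual host):
# googlebot_hits_per_ip /var/log/nginx/access.log
```

Keep in mind that this matches on the user agent string only, so anything pretending to be Googlebot is counted as well.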

So if you want to avoid that, fixing it in Apache is out of the scope here, but you could easily do it with a RewriteCond line.

Doing it in Nginx should be relatively easy if you have different virtual host files for your main site and the CDN (which is recommended as they generally have different caching behaviour, etc). Find the top “location” block in your Nginx configuration. In my case, it looks like this:

location / {
    index index.php index.html index.htm;
    try_files $uri $uri/ @rewrite;
}

Change it to the following (replace yoursite.com with the name of your site):

location / {
    index index.php index.html index.htm;
    # Avoid Googlebot in here
    if ($http_user_agent ~ Googlebot) {
        return 301 http://www.yoursite.com$request_uri;
    }
    try_files $uri $uri/ @rewrite;
}

Reload your Nginx configuration and… done.

To test it, use the User Agent Switcher extension for Firefox. Beware that your browser generally uses DNS caching, so if you have already loaded the page, you will probably have to restart your browser (or maybe use a new browser instance with firefox --no-remote and install the extension in that one *before* loading the page).

Once the extension is installed, choose one of the Googlebot user agents in Tools -> Default User Agent -> Spider – Search, then load your CDN page: you should get redirected to the www page straight away.
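The same check can probably be done without a browser, using curl, whose -A option sets the user agent and -I asks for the response headers only. In the sketch below, both function names are made up, and cdn.yoursite.com stands for one of your CDN subdomains.

```shell
# Read raw HTTP response headers on stdin and print the status code and,
# if present, the target of the Location header.
extract_status_and_location() {
    tr -d '\r' | awk '
        NR == 1 { print "status=" $2 }
        tolower($1) == "location:" { print "location=" $2 }'
}

# Request a URL while pretending to be Googlebot and summarize the answer.
check_googlebot_redirect() {
    curl -s -I -A "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)" "$1" |
        extract_status_and_location
}

# Example (placeholder host):
# check_googlebot_redirect http://cdn.yoursite.com/some/image.png
```

If the rule is active, the summary should show a 301 status and a location pointing to the www host. Running the same curl command without -A should show the normal response instead.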

Google’s new terms to reduce spamming are really taking effect

I don’t know about you, but in recent months, I’ve received a series of requests which follow the same pattern:

LINK REMOVAL REQUEST

Dear Website Owner:

It has come to our attention that a number of links exist on your domain which send traffic to our website [theirdomain.com]. We have determined that these links may be harmful either to the future marketing and reputation of [theirdomain.com], or to our users. Accordingly, we kindly request that you (A) remove all existing links to [theirdomain.com] from your domain, including, but not limited to the following URLs:

http://www.mydomain.com/…

(B) Cease creation of any additional links to [theirdomain.com]

(C) provide us with prompt notification once links to [theirdomain.com] have been removed by return email.

We do apologise for this inconvenience but [theirdomain.com] has been warned by Google of quality issues with their backlinks and we need to remove link that are simply not a good fit for [theirdomain.com].

Thanking you in anticipation of your co-operation in removing these links at your earliest convenience.

If you have a forum, if you’ve followed a little bit about this SEO stuff and the new rules used by Google, basically you’ll realize that these sites originally hired very cheap human spammers to come and fill (and I really mean fill, like filled-to-the-top-with-something-still-on-top-of-the-top kind of filling) your forum with crappy messages linking to their site to try and help their ranking get better so they could sell more than lawful businesses while not bothering about anything.

Now that Google changed their rules, these links act in the opposite way, bringing down the ranking of those sites which used unlawful/unethical practices to promote themselves in the first place, and now they have the nerve to ask you to remove their crap! This is my suggested answer to them.

Dear Sirs,

Our site has suffered several months of moral damages (we have several e-mails of complaints from our users) and countless hours lost, caused by your clearly intentional, clearly unethical low-cost spamming campaign, meant to cheat the system and bring your site on top of others.

Now that Google changed the rules and that these same links are harmful to you, you are requesting us to spend valuable time cleaning your mess, which was created by your organization’s very obvious lack of good intentions and lack of respect for others.

Chamilo is an educational free software project and a non-profit association whose reputation has suffered severely from your past actions. However, considering the damages caused, we will consider removing those links in exchange for a US$20,000 fee (paid in advance) + our normal rate of US$xxx/h (which will depend on the amount of time it will take us to remove the links). The money received from this cleaning operation will be used to benefit the development of the free Chamilo LMS application.

Best regards,

YW

I don’t know about you, but I’m actually even wondering if I should give them the opportunity at all… Come on, you cannot honestly let people like this on the loose, destroying the good sides of Our Internet…

Update 20130814: Actually, a more interesting answer might be “We are not responsible for the content put on our website by third parties. You are welcome to contact each such third party to ask them to remove the links they placed on our website, apparently infringing our policies of fair use.”